CEQ’s CE Explorer: You don’t know what you’ll run into!

On June 5th, CEQ’s Permitting Innovation Center released its first prototype experiment, the Categorical Exclusion Explorer, at https://ce.permitting.innovation.gov. The Explorer marks the first time a collection of cross-agency Categorical Exclusions (CEs) has been transformed into data for experimentation. The tool is still in the experimental stage, and we applaud the Permitting Innovation Center for releasing early-stage products and experiments. Early and iterative releases are ideal for gathering feedback and improving future releases.

We’ve previously discussed environmental review data management and sharing as an area ripe for experimentation and innovation.

Why categorical exclusions matter

For those unfamiliar with the term, "categorical exclusion" or CE means a category of actions that a Federal agency has determined normally does not significantly affect the quality of the human environment within the meaning of section 4332(2)(C) of 42 USC. The plain language version of the definition could be, as we've written before:

Agencies are allowed to exempt lots of minor actions from review and even some major actions that have predictable impacts that someone has determined don't deserve a full review. And there are literally thousands of them (e.g. including four for different types of picnics). Often one agency has a useful category of exclusion that another agency could use for its projects - but can't because the way policy has evolved, each agency has to do its own. The law now lets agencies adopt categorical exclusions from their peers, as long as they talk about it beforehand and share information about doing so with the public. This is a great change that could allow lots of exclusions that benefit nature restoration be used by multiple agencies.

Finding a CE that matches a proposed project is ideal for almost any type of project. It still means paperwork and planning, but an appropriate exclusion typically cuts the time and cost of a review dramatically. It's not an automatic "advance to GO and collect $200," but it puts you closer to a decision.

Under the old CEQ regulations, one agency could not use another agency’s CE without first going through a full rule-making process, which could take two to four years. The Fiscal Responsibility Act changed that in 2023 by streamlining the process for one agency to use another agency’s exclusions, but that has highlighted a deeper challenge: the lack of a single place to quickly find exclusions from across the agencies and identify those that are possibly relevant to a new project. Prior to the CE Explorer, CEQ maintained a spreadsheet of each agency's CEs. It was a massive CSV file that offered no functionality beyond what an individual user might know about Excel formulas and pivot tables.

Using the CE Explorer

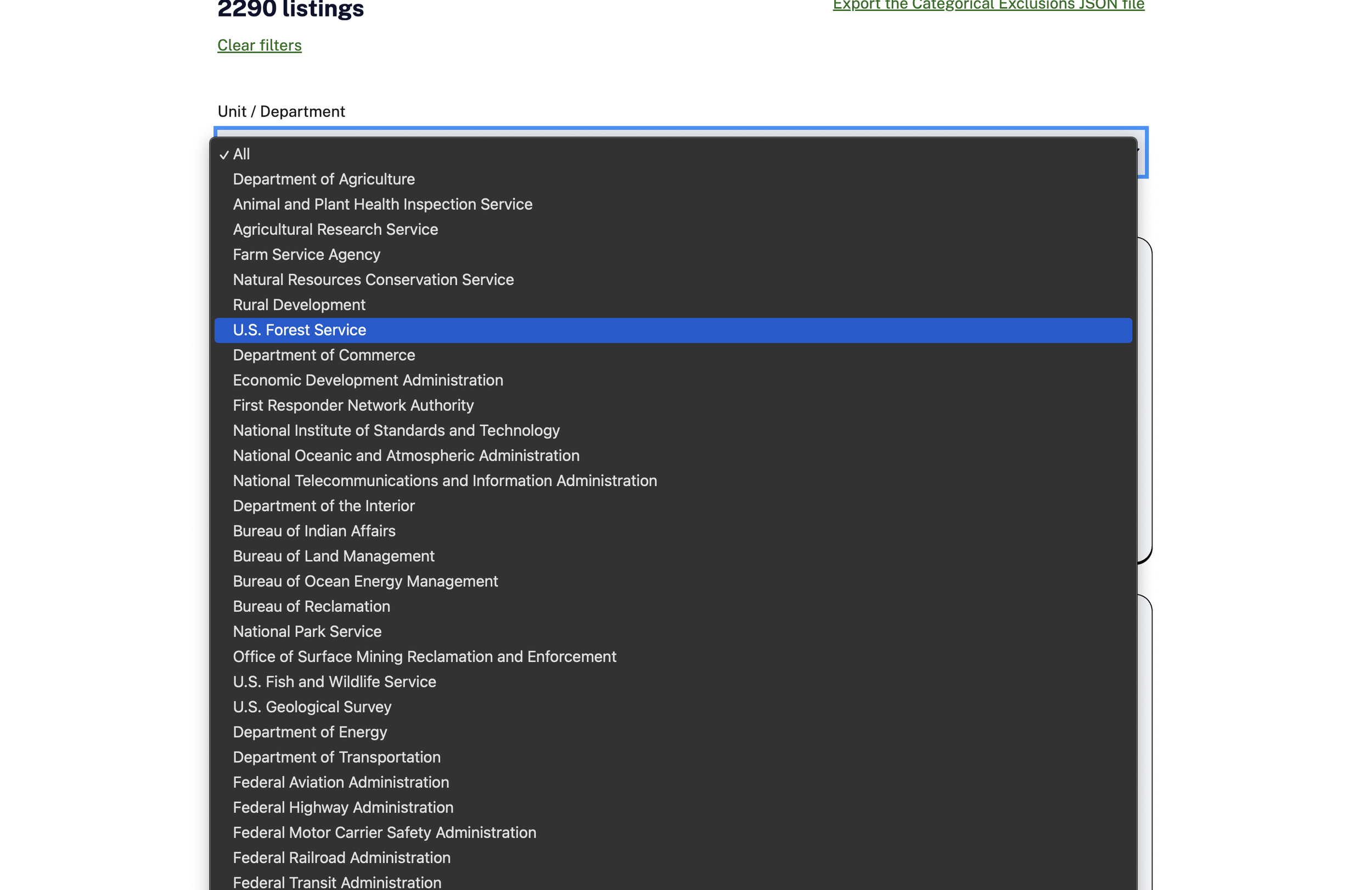

As launched, the explorer is a straightforward proposition: text search across 2,290 CEs and then the ability to filter the results by the agencies contained in the results. The results display in a card with details, from which the user clicks through to more detail, and a link to the official government website providing that CE. If you don't feel like searching, select an agency from the drop-down list to see all the CEs listed.
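For readers who think in code, the search-then-filter flow described above can be sketched in a few lines of Python. This is only an illustration, not how the Explorer is actually built: the records are made up, and the field names `agency` and `text` are assumptions that may not match the site's real data.

```python
from collections import Counter

# Made-up sample records. The field names "agency" and "text" are
# assumptions for illustration, not the Explorer's actual schema.
SAMPLE_CES = [
    {"agency": "U.S. Forest Service",
     "text": "Repair and maintenance of roads, trails, and boundaries."},
    {"agency": "Bureau of Land Management",
     "text": "Routine road repair and culvert replacement."},
    {"agency": "National Park Service",
     "text": "Installation of signs and picnic tables."},
]


def search(ces, query):
    """Return CEs whose text contains every word of the query (case-insensitive)."""
    words = query.lower().split()
    return [ce for ce in ces if all(w in ce["text"].lower() for w in words)]


def agency_counts(results):
    """Count results per agency, which is what an agency filter would list."""
    return Counter(ce["agency"] for ce in results)


def filter_by_agency(results, agency):
    """Narrow a result set to a single agency."""
    return [ce for ce in results if ce["agency"] == agency]


results = search(SAMPLE_CES, "road repair")
counts = agency_counts(results)
blm_only = filter_by_agency(results, "Bureau of Land Management")
```

The filter is built from the agencies present in the results rather than from the full agency list, which mirrors the behavior described above.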

The opening screen of the Categorical Exclusion Explorer.

You can choose an agency such as the U.S. Forest Service without searching. I included a partial list of the agencies in the screenshot because it's one heck of a drop-down.

As you can see, the drop-down list is rather long.

The site quickly delivers the 33 results in the data set. It requires a bit of scrolling to see all the results, but let's focus on the first result because I always brake for bighorn sheep.

A sample set of results for selecting “U.S. Forest Service” from the drop-down menu.

If the details given in the CE don’t supply enough information, the page links to the official list of the U.S. Forest Service's CEs on the authoritative source at eCFR. The word "authoritative" is critical because the explorer uses data from a source that could be out of sync with the official details. The tool lets users explore the collection first and see if something might apply to their work or use case.

The details of a CE, in this case, from the U.S. Forest Service

Challenges

Part of the challenge in working with CEs is how specific and formal the language is. That said, some common searches yield worthwhile results. For example, searching for "road repair" returns fifteen CEs across twelve agencies. This search highlights how much overlap may exist across agencies.

A search result for “road repair.”

Because the content isn't in plain language, you must think in specific scientific and bureaucratic terms, which can be much more complicated than it sounds. Also, the results card takes up a lot of space, requiring some scrolling to work through a large set of results.

Where we hope this goes

The CE Explorer proves a searchable catalog is possible and worth iterating on and improving. Our initial wishlist would be:

The data set would be more helpful if future tool iterations allowed for some synonyms and related terms. That will require some help from subject matter experts and a little information architecture, both well within reach of the Permitting Innovation Center.
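As a rough idea of what synonym support could look like, here is a minimal Python sketch. The synonym table is hypothetical (a real one would come from subject matter experts), and nothing here reflects how the Explorer's search is implemented.

```python
# Hypothetical synonym table for illustration only; real entries would
# need to be curated by subject matter experts.
SYNONYMS = {
    "road": {"road", "roadway", "highway"},
    "repair": {"repair", "maintenance", "rehabilitation"},
}


def expand(query):
    """Map each query word to the set of terms accepted in its place."""
    return [SYNONYMS.get(word, {word}) for word in query.lower().split()]


def matches(text, query):
    """True if the text contains each query word or one of its synonyms."""
    lowered = text.lower()
    return all(any(term in lowered for term in group) for group in expand(query))


hit = matches("Routine maintenance of existing highway shoulders", "road repair")
miss = matches("Installation of picnic tables", "road repair")
```

Here `hit` is true even though the text never says "road" or "repair," because "highway" and "maintenance" stand in for them. The sketch uses simple substring matching, so a production version would want word-boundary handling to avoid matching "road" inside "broad."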

Design-wise, we hope they add more detail to the interactions, adjust the sizing of the cards, and refine the interface, but rough edges are to be expected from a 1.0.

Collecting all the existing CEs in a data format is the first step towards comparing, adjusting, finding commonality across agencies, and removing unnecessary CEs. Cross-agency CEs would be a huge step forward towards consistent standards for all involved in the permitting process, and eliminating CEs where they don’t make sense (like picnics!) will eliminate unnecessary permits.

Having the data source available as a JSON file (as noted below) is another step towards making the CEs easier to use in AI applications, whether as input to natural language processing algorithms or as training data for large language models (LLMs).

We'd also like to see future methods for identifying often-used CEs, to give users a sense of which CEs are most likely to be relevant to them.

The site is lightning-fast, so kudos to whoever put it together in plain text and rendered it in the browser. Keep it as is, light and fast.

Closing thoughts

The tool will need updates and upgrades to be more useful, and we look forward to seeing what the Permitting Innovation Center does with it next. Or, if some other developer wanted to play with this as a prototype for their development, that would be something worth seeing. We also appreciate the dataset that runs the explorer, which is available directly from the site as a JSON file download.

We’ve added the CE Explorer to our Permitting Tools Inventory, with over eighty other examples of tools, interactive maps, and decision-making support across federal agencies, states, private companies, and non-profit organizations.

OK, I'm off to read up on bighorn sheep during lambing season. Thanks for reading.

Photo of Bighorn sheep courtesy of the National Park Service, photo by Sally King